Aug 11, 2025

At Cabify, we rely heavily on external route providers to deliver the most accurate and efficient navigation experiences. Whether it’s estimating journey durations or calculating distances between pickup and drop-off points, our routing infrastructure needs to be both fast and reliable.

But how do we safely compare different routing providers to validate accuracy, performance, and stability—without affecting our production environment?

In this post, we’ll explore how we use shadow requests in Go to evaluate external route APIs in parallel. This approach lets us test providers under real-world traffic conditions while keeping the production path untouched. We’ll also cover how we collect deviation metrics to monitor the differences between actual and shadowed responses.

Incorporating a new route provider or validating changes in existing ones can be risky if tested directly in production. Shadow requests solve this by duplicating real user requests and sending them to the new provider in parallel. This fire-and-forget approach ensures the main execution flow remains unaffected. Crucially, these shadow requests never change what the user receives: the response always comes from the production provider, and the shadow result is used only for comparison.

This makes shadowing a powerful strategy for continuous validation and experimentation without any user-facing risk.

In our routing system, we apply the Decorator Pattern to enhance the behavior of existing route providers without modifying their internal logic. This is achieved by wrapping the original routes.Provider with a custom provider implementation that adds shadowing capabilities.

```go
type provider struct {
	actual   routes.Provider
	shadowed routes.Provider
	// ...
}
```

The NewProvider function takes two routes.Provider instances:

- actual: the main provider used for production traffic.
- shadowed: the secondary provider used only for shadowing and comparison.

Our custom provider struct implements the same Route method signature as the original provider interface:

```go
func (p *provider) Route(ctx context.Context, request routes.Request) (response routes.Response, err error) {
	// custom shadow provider logic
	// only shadow when a worker slot is free; otherwise skip shadowing entirely
	if p.tryAcquireWorker() {
		// create a buffered channel to send the main response
		respCh := make(chan responseWrapper, 1)

		// defer the sending of the response to the channel; because the results
		// are named, this runs after the return below has set them
		defer func() {
			respCh <- responseWrapper{response, err}
			close(respCh)
		}()

		// use a goroutine to shadow the request and report metrics
		go func() {
			defer p.releaseWorker()
			p.shadowRequestReport(ctx, request, respCh)
		}()
	}

	return p.actual.Route(ctx, request)
}
```

This means we can transparently replace any existing provider with our decorated version, and it will still behave the same from the caller’s perspective—except now it also sends shadow requests in the background and logs metrics.

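The beauty of the Decorator Pattern here is that nothing else has to change: the wrapped providers keep their internal logic, callers keep using the same routes.Provider interface, and all of the shadowing logic lives in a single wrapper.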

This pattern helps us maintain clean code while enabling powerful instrumentation features safely and independently.

```mermaid
flowchart LR
    A["Route request"] --> B["Call actual provider"]
    A --> F{"Max workers available?"}
    B <--> G["Return response to user"]
    G -.-> H["Send main response to channel"]
    H -.-> E["Collect deviation metrics"]
    F -- Yes --> D["Send shadow request (non-blocking)"]
    D --> E
    F -- No --> C["Discard shadow request"]
    style A fill:#e0e0ff,stroke:#333,stroke-width:1px
    style B fill:#e0e0ff,stroke:#333,stroke-width:1px
    style G fill:#e0e0ff,stroke:#333,stroke-width:1px
    style H fill:#f9f,stroke:#333,stroke-width:1px
    style D fill:#f9f,stroke:#333,stroke-width:1px
```

When a new routing request arrives at our service, we process it in two distinct paths—one that fulfills the user request (the main path), and another that evaluates a secondary provider for comparison (the shadow path).

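Here’s how we handle it:

- The main path calls the actual provider and returns its response to the user.
- In parallel, if a shadow worker is available, the same request is sent to the shadowed provider without blocking the main path. If no worker is free, the shadow request is discarded.
- Once the main response is ready, it is sent over a channel to the shadow goroutine, which compares both responses and collects deviation metrics.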

This approach lets us test new routing APIs in production—at scale and with confidence—while still delivering the performance and reliability our users expect.

To safely handle shadow requests in Go, we follow a few idiomatic concurrency practices:

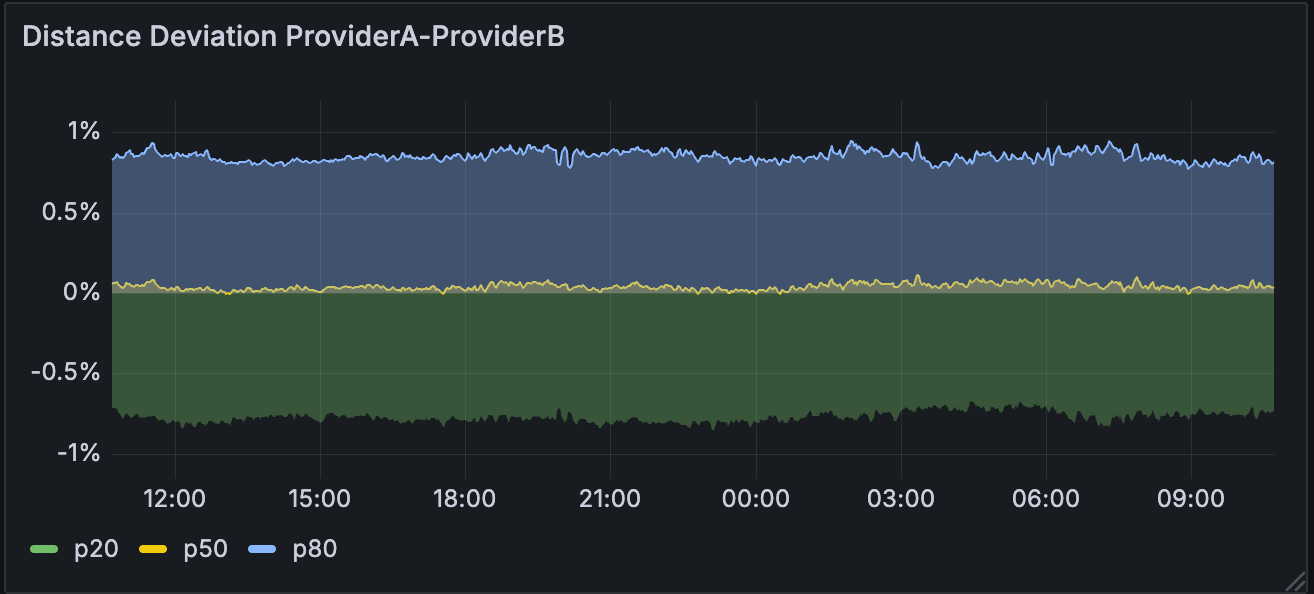

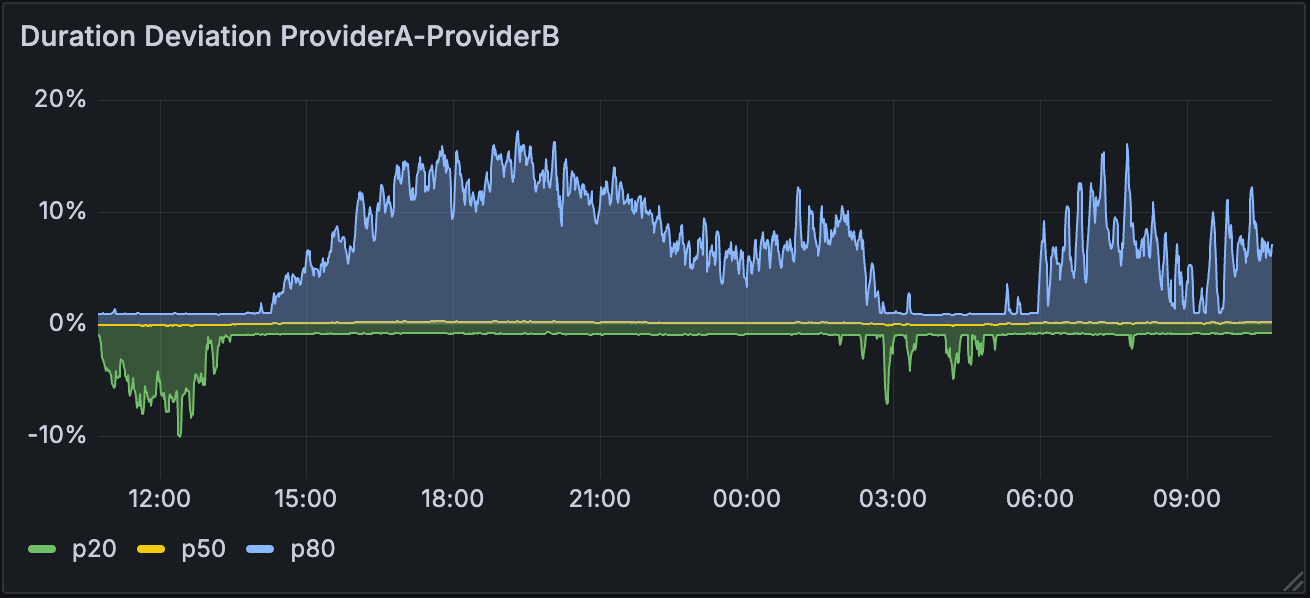
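The flowchart’s “Max workers available?” check and the tryAcquireWorker/releaseWorker calls in Route point to a bounded, non-blocking worker pool. The post doesn’t show how those helpers are built, so the following is only a sketch of one idiomatic option, assuming a buffered channel used as a counting semaphore; the `workerLimiter` type and `newWorkerLimiter` constructor are hypothetical names.

```go
package main

import (
	"fmt"
	"sync"
)

// workerLimiter is a hypothetical counting semaphore: each buffered slot
// represents one in-flight shadow request.
type workerLimiter struct {
	slots chan struct{}
}

func newWorkerLimiter(maxWorkers int) *workerLimiter {
	return &workerLimiter{slots: make(chan struct{}, maxWorkers)}
}

// tryAcquireWorker takes a slot if one is free and returns false
// immediately otherwise, so the main path never waits on shadowing.
func (l *workerLimiter) tryAcquireWorker() bool {
	select {
	case l.slots <- struct{}{}:
		return true
	default:
		return false
	}
}

// releaseWorker frees a slot; call it exactly once per successful acquire.
func (l *workerLimiter) releaseWorker() {
	<-l.slots
}

func main() {
	l := newWorkerLimiter(2)
	var wg sync.WaitGroup
	release := make(chan struct{})

	acquired, discarded := 0, 0
	for i := 0; i < 5; i++ {
		if !l.tryAcquireWorker() {
			discarded++ // mirrors "Discard shadow request" in the flowchart
			continue
		}
		acquired++
		wg.Add(1)
		go func() {
			defer wg.Done()
			defer l.releaseWorker()
			<-release // simulate a slow shadow call holding its slot
		}()
	}
	close(release)
	wg.Wait()
	fmt.Println(acquired, discarded, l.tryAcquireWorker()) // prints: 2 3 true
}
```

Because the select has a default branch, a saturated pool costs the main path one failed channel send rather than a wait, which matches the flowchart’s choice to discard shadow requests instead of queueing them.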

To understand how well a shadow route provider performs compared to our production provider, we capture deviation metrics on every paired request. These metrics help us quantify how “off” the shadow provider is in real-world conditions without ever affecting the user experience.

We focus on three core metrics:

- Distance deviation: how much longer or shorter the shadow provider’s route is compared to the actual one. Formula: (shadowDistance / actualDistance) - 1
- Duration deviation: compares the estimated travel time between both providers. Formula: (shadowDuration / actualDuration) - 1
- Traffic-adjusted duration deviation: evaluates how both providers handle live traffic conditions. Formula: (shadowDurationInTraffic / actualDurationInTraffic) - 1

A value of 0 means both providers agree; a positive value means the shadow provider reports a larger distance or duration, and a negative value a smaller one.

These metrics are collected for every request where both providers return valid routes. In addition, we log all route responses whenever both responses are valid and no errors are present.

All metrics are tagged with contextual labels (e.g., provider name, region, and shadow label) and are exported to our observability platform via OpenTelemetry and Prometheus.

With this data in hand, we can make informed decisions about onboarding, tuning, or discarding a provider—all without impacting the customer experience.

Shadow requests have enabled us to confidently test and evaluate third-party route APIs in a production-like environment—without the risks of direct integration. By instrumenting shadow responses and collecting deviation metrics, we continuously validate the accuracy and performance of alternative providers.

This observability-first approach allows us to make informed decisions on provider usage, all while maintaining system safety and user experience.

Got questions or want to learn more? Join us at Cabify Tech and help shape the infrastructure behind mobility at scale.

Software Engineer