Nov 20, 2025

How can a cloud-hosted CI/CD service build your iOS app when your source code lives in a self-hosted GitLab instance that it is never given standing access to?

This question became our central challenge when we needed to integrate Bitrise’s specialized iOS build capabilities with Cabify’s security-first GitLab infrastructure. While webhook-driven external builds are common in the industry, our self-hosted GitLab instance with strict access policies required us to adapt these patterns in ways that maintained both security and developer experience.

At Cabify, we treat our source code as a shared responsibility. Our self-hosted GitLab instance sits securely behind our firewall, and because anyone in the company can create merge requests and trigger pipelines, granting persistent third-party access would open risks we are not willing to take. Every engineering team owns its security decisions, with the security group acting as advisors and reviewers. This collective model means no external service has continuous access to our code, while still allowing us to collaborate and ship quickly.

This setup did not conflict with modern CI/CD practices themselves. It conflicted with outsourcing CI/CD to a third party. Services like Bitrise expect to clone your repository directly, just as GitLab CI/CD does. The catch was that running our iOS builds on GitLab CI/CD was not practical at our scale, yet we could not simply hand our repository to Bitrise either.

The obvious question: why not just run everything on GitLab CI and avoid the external dependency altogether?

We evaluated this option thoroughly, but the realities of iOS development made it impractical.

After weighing GitLab CI, we decided to buy rather than build. Bitrise gave us what we lacked: managed macOS runners, fast adoption of new Xcode versions, and built-in integrations for iOS distribution. Replicating this in-house would have been far more expensive in engineering time than paying for Bitrise. The real challenge was how to use it safely.

Our solution emerged from a simple principle: use each tool for what it does best, but maintain clear security boundaries.

In this post, I will walk you through how we implemented this philosophy, tackling four key challenges: secure authentication, clone performance, test efficiency, and comprehensive feedback loops.

The first challenge was adapting the standard webhook-driven build pattern to work within our security constraints. Instead of granting Bitrise persistent access to GitLab, we needed GitLab to initiate builds on our terms.

Standard webhook pattern:

External service detects repository events → clones with persistent credentials → runs workflow.

Our adapted pattern:

GitLab detects the event → triggers build via API → external service clones using short-lived credentials.

This adaptation shifts control from the external service to our internal systems. Rather than Bitrise pulling from us whenever it detects changes, we push build requests to Bitrise in a way compatible with our security policies.

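```bash
# Simplified curl used from a GitLab CI job.
# jq builds the JSON so the CI variables are actually expanded (inside single
# quotes they would be sent literally) and commit messages are escaped.
curl --fail --silent --show-error \
  -H "Authorization: $BITRISE_TOKEN" \
  -H "Content-Type: application/json" \
  -d "$(jq -n \
    --arg branch "$CI_COMMIT_REF_NAME" \
    --arg sha "$CI_COMMIT_SHA" \
    --arg msg "$CI_COMMIT_MESSAGE" \
    '{hook_info: {type: "bitrise"},
      build_params: {branch: $branch, commit_hash: $sha, commit_message: $msg}}')" \
  "https://api.bitrise.io/v0.1/apps/$BITRISE_APP_SLUG/builds"
```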

Why this adaptation? Our security model prohibits long-lived external credentials. By driving builds from GitLab, we keep control over the credential lifecycle and give external services only the minimum access they need: specific commit information and time-limited authentication.
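To make the idea of time-limited authentication concrete, here is a minimal bash sketch that carries a short-lived clone token inside the trigger request itself. It assumes the token travels through the build_params.environments list of Bitrise's build trigger API; the GITLAB_CLONE_TOKEN name and both function names are hypothetical. It avoids a jq dependency, so json_escape only handles backslashes, double quotes, newlines, carriage returns and tabs.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: build a Bitrise trigger payload that carries a
# short-lived clone token, without depending on jq.

# Escape a string for a JSON string literal. Handles backslash, double quote,
# newline, carriage return and tab only (other control characters are not).
json_escape() {
  local s=$1 bs='\' q='"'
  s=${s//"$bs"/"$bs$bs"}   # \  -> \\
  s=${s//"$q"/"$bs$q"}     # "  -> \"
  s=${s//$'\n'/"${bs}n"}   # newline -> \n
  s=${s//$'\r'/"${bs}r"}   # carriage return -> \r
  s=${s//$'\t'/"${bs}t"}   # tab -> \t
  printf '%s' "$s"
}

# JSON body for POST /v0.1/apps/<app-slug>/builds.
# Arguments: branch, commit SHA, commit message, short-lived clone token.
bitrise_trigger_payload() {
  local branch sha msg token
  branch=$(json_escape "$1")
  sha=$(json_escape "$2")
  msg=$(json_escape "$3")
  token=$(json_escape "$4")
  printf '{"hook_info":{"type":"bitrise"},"build_params":{"branch":"%s","commit_hash":"%s","commit_message":"%s","environments":[{"mapped_to":"GITLAB_CLONE_TOKEN","value":"%s","is_expand":false}]}}' \
    "$branch" "$sha" "$msg" "$token"
}
```

The output becomes the -d body of the trigger request, with the freshly minted token as the fourth argument. Anything passed this way should be treated as readable by anyone who can inspect the build, which is exactly why the token has to expire quickly.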

The key to making this work securely was a rotating Personal Access Token (PAT) system.

This gave us the best of both worlds: Bitrise could access our code when needed, but only with credentials that expired quickly and had minimal permissions. Even if a token were somehow compromised, the blast radius would be tiny and short-lived.
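One way to mint such a credential is sketched below in bash, under the assumption that GitLab's project access token API is used (POST /api/v4/projects/:id/access_tokens) rather than user-level personal tokens. The token gets only the read_repository scope and the Reporter role (access_level 20), which can clone but not push. The function names, the token name bitrise-clone, the TOKEN_MINTER_PAT variable and the oauth2 placeholder username are all hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for minting a short-lived, read-only clone token with
# GitLab's project access token API.

# Request body. name and expires_at (YYYY-MM-DD) are assumed to be plain,
# JSON-safe strings supplied by the caller.
clone_token_request_body() {
  local name=$1 expires_at=$2
  printf '{"name":"%s","scopes":["read_repository"],"access_level":20,"expires_at":"%s"}' \
    "$name" "$expires_at"
}

# Create the token from inside a GitLab CI job. TOKEN_MINTER_PAT is a
# hypothetical credential that never leaves GitLab. The JSON response
# contains the new secret in its "token" field.
mint_clone_token() {
  local api=$1 project_id=$2 expires_at=$3
  curl --fail --silent --show-error --request POST \
    --header "PRIVATE-TOKEN: $TOKEN_MINTER_PAT" \
    --header "Content-Type: application/json" \
    --data "$(clone_token_request_body bitrise-clone "$expires_at")" \
    "$api/projects/$project_id/access_tokens"
}

# HTTPS clone URL with the token as the password. The "oauth2" username is
# a placeholder assumption.
clone_url_with_token() {
  local host=$1 project_path=$2 token=$3
  printf 'https://oauth2:%s@%s/%s.git' "$token" "$host" "$project_path"
}
```

Because expires_at is a calendar date rather than a timestamp, lifetimes with this API are measured in days; revoking the token through the same API once the build finishes would shorten its life further. The token also ends up in the clone's remote URL in .git/config, which is tolerable only because it expires.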

With authentication sorted, we hit our second major challenge: performance. Our Rider monorepo is a beast of 7 GB, packed with large binary assets, design files, and years of iOS development. A naive git clone was taking 8 to 12 minutes, turning our “fast” CI/CD pipeline into a coffee break.

This is not unique to GitLab or Bitrise. Any CI/CD system with fresh runners would feel the pain. What we needed were good practices that also happened to make our split GitLab plus Bitrise pipeline much more efficient.

We solved this with what I like to call “strategic laziness”, which means being smart about what we do not download:

Shallow clone (--depth 100, --no-tags): Skip most Git history while keeping enough depth for merge base detection. We tuned the depth based on our workflow patterns. The --depth 100 setting gave us the sweet spot: deep enough for Git to find common ancestors for most merge requests, shallow enough to avoid years of history.

Git LFS + caching: Move large assets (design files, framework binaries, test fixtures) to LFS storage. Bitrise’s cache keeps the .git/lfs directory between runs, so subsequent builds only download changed assets.
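The two techniques combine into a clone step along these lines. This is a minimal bash sketch, not the exact Bitrise step: shallow_clone and fetch_lfs_assets are hypothetical names, and it assumes a cache step restores .git/lfs into the fresh clone between the two calls.

```bash
#!/usr/bin/env bash
# Hypothetical clone step: shallow history, no tags, LFS content deferred.
# Arguments: repository URL, destination dir, branch, depth (default 100).
shallow_clone() {
  local url=$1 dest=$2 branch=$3 depth=${4:-100}
  # GIT_LFS_SKIP_SMUDGE=1 leaves LFS pointer files in place during checkout,
  # so the clone itself transfers only Git objects.
  GIT_LFS_SKIP_SMUDGE=1 git clone --quiet --depth "$depth" --no-tags \
    --single-branch --branch "$branch" "$url" "$dest"
}

# Run after the cache step has restored .git/lfs into the clone:
# git lfs pull only downloads objects missing from local LFS storage.
fetch_lfs_assets() {
  local dest=$1
  if ! git -C "$dest" lfs version >/dev/null 2>&1; then
    return 0  # git-lfs is not installed; nothing to fetch
  fi
  git -C "$dest" lfs pull
}
```

Because --single-branch fetches only the source branch, computing a merge base against a merge request's target branch also needs that branch at a similar depth, for example with git fetch --depth 100 origin followed by the target branch name.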

Result: We went from 8 to 12 minutes down to about 25 seconds from “start clone” to a usable working copy. That is roughly a 20x to 30x speedup, and it transformed our developer experience.

Our third challenge was testing efficiency. Initially, we were running a linear pipeline: clone → build → unit tests → integration tests → deploy. With over 1,200 tests in our suite, this approach took more than 45 minutes per merge request. Developers were batching changes to avoid the wait, which defeated the purpose of CI/CD.

Separating compilation from testing enabled better parallelization. Instead of building and testing in sequence, we built once and fanned out to multiple parallel test runners.

Our workflow follows this pattern:

1. Prepare the .xcworkspace used in all subsequent steps.
2. Build once with xcodebuild build-for-testing -enableCodeCoverage YES, which produces the .xctestrun files and compiled test bundles.
3. Run the compiled tests in four parallel groups:

| Group | Suites | Median time | Why this split? |
|---|---|---|---|
| Integration-1 | XCUITest shard 1 | about 8 minutes | Heavy UI automation tests |
| Integration-2 | XCUITest shard 2 | about 8 minutes | More UI automation tests |
| Integration-3 | XCUITest shard 3 | about 8 minutes | Remaining UI tests |
| Unit + Screenshots | All unit tests and snapshot tests | about 5 minutes | Fast, isolated tests |

The key lies in the sharding strategy. Our XCUITests are slower and more resource-intensive than unit tests. Splitting them across three runners allows us to execute them in parallel while keeping unit tests in their own shard.
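The fan-out step can be sketched as a bash function that runs one shard against the shared .xctestrun file with xcodebuild test-without-building. It is a hypothetical helper: the round-robin split by test identifier is a simplification of the suite-based grouping in the table, the shard-N.xcresult naming is an assumption, and the XCODEBUILD override exists only so the command can be dry-run.

```bash
#!/usr/bin/env bash
# Hypothetical shard runner for the fan-out step. It runs one shard of a
# build-for-testing output and writes that shard's own .xcresult bundle.
# XCODEBUILD can be overridden for dry runs.
# Arguments: shard index (0-based), shard count, .xctestrun path,
#            destination, then test identifiers in Target/Class form.
run_shard() {
  local index=$1 count=$2 xctestrun=$3 destination=$4
  shift 4
  local -a args=(test-without-building
    -xctestrun "$xctestrun"
    -destination "$destination"
    -resultBundlePath "shard-$index.xcresult")
  local i=0 selected=0 test_id
  for test_id in "$@"; do
    # Round-robin: the i-th identifier belongs to shard (i mod count).
    if (( i % count == index )); then
      args+=("-only-testing:$test_id")
      selected=$((selected + 1))
    fi
    i=$((i + 1))
  done
  # Without any -only-testing filter, xcodebuild would run every test.
  if (( selected == 0 )); then
    echo "shard $index has no tests assigned" >&2
    return 1
  fi
  "${XCODEBUILD:-xcodebuild}" "${args[@]}"
}
```

Retrying a failed shard is then a matter of calling run_shard again with the same index against the cached build products. A split balanced by historical test durations would even out shard times better than round-robin.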

The resilience factor: If a shard fails (which happens, especially with UI tests), we can retry just that shard in isolation. Because compiled artifacts are cached on Bitrise, a retry takes only about 8 minutes instead of a rerun of the entire 25-minute pipeline.

The transformation: This approach reduced total pipeline time from more than 45 minutes to 25 minutes, a reduction of over 40%, with most of the execution running in parallel. The pipeline also became more predictable.

We are currently investigating Bitrise’s automatic test sharding feature to simplify this flow, but our manual approach has served us well.

Fast, parallel tests are only useful if developers get actionable feedback. This is where we close the loop by bringing Bitrise results back into GitLab.

We built this feedback in two phases.

Phase 1: Basic Status Updates

We update GitLab’s commit status to show the overall pipeline state:

```
POST /api/v4/projects/:gitlab_project_id/statuses/:sha
state = success | failed | running
```
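A minimal bash sketch of that Phase 1 bridge follows. It assumes Bitrise's numeric build status codes (0 not finished, 1 successful, 2 failed, 3 aborted with failure, 4 aborted with success); how aborted builds are mapped, the status name bitrise, and the GITLAB_STATUS_TOKEN variable are illustrative assumptions. GitLab's statuses endpoint also accepts pending and canceled, which is why canceled appears here.

```bash
#!/usr/bin/env bash
# Hypothetical Phase 1 bridge from a Bitrise build to a GitLab commit status.
# Assumed Bitrise status codes: 0 not finished, 1 successful, 2 failed,
# 3 aborted with failure, 4 aborted with success.
gitlab_state_for() {
  case $1 in
    0) echo running ;;
    1) echo success ;;
    2|3) echo failed ;;
    4) echo canceled ;;   # aborted builds that did not fail
    *) return 1 ;;        # unknown code: refuse to guess
  esac
}

# POST /api/v4/projects/:id/statuses/:sha with form-encoded fields.
# GITLAB_STATUS_TOKEN is a hypothetical token allowed to set commit statuses.
# Arguments: API base URL, project id, commit SHA, Bitrise status code, build URL.
post_commit_status() {
  local api=$1 project_id=$2 sha=$3 target_url=$5 state
  state=$(gitlab_state_for "$4") || return 1
  curl --fail --silent --show-error --request POST \
    --header "PRIVATE-TOKEN: $GITLAB_STATUS_TOKEN" \
    --data-urlencode "state=$state" \
    --data-urlencode "name=bitrise" \
    --data-urlencode "target_url=$target_url" \
    "$api/projects/$project_id/statuses/$sha"
}
```

Setting target_url to the Bitrise build page lets developers click straight from the merge request to the build logs.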

Phase 2: Rich Reporting with Danger

Developers need to know why a build failed, which tests are unstable, and how their changes affect coverage. Instead of running Danger on Bitrise, we trigger a downstream GitLab pipeline dedicated to reporting. This keeps all post-processing tools within the same security perimeter as our source code.
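Triggering the reporting pipeline could go through GitLab's pipeline trigger API. The bash sketch below assumes a pipeline trigger token in a hypothetical REPORT_TRIGGER_TOKEN variable and passes the Bitrise build slug and commit SHA as pipeline variables (BITRISE_BUILD_SLUG and REPORTED_SHA are hypothetical names), so the reporting job knows which build's results to fetch. The CURL override exists only for dry runs.

```bash
#!/usr/bin/env bash
# Hypothetical trigger for the downstream reporting pipeline, using
# GitLab's pipeline trigger API (POST /api/v4/projects/:id/trigger/pipeline).
# CURL can be overridden for dry runs.
# Arguments: API base URL, project id, ref, Bitrise build slug, commit SHA.
trigger_report_pipeline() {
  local api=$1 project_id=$2 ref=$3 build_slug=$4 sha=$5
  "${CURL:-curl}" --fail --silent --show-error --request POST \
    --form "token=$REPORT_TRIGGER_TOKEN" \
    --form "ref=$ref" \
    --form "variables[BITRISE_BUILD_SLUG]=$build_slug" \
    --form "variables[REPORTED_SHA]=$sha" \
    "$api/projects/$project_id/trigger/pipeline"
}
```

Inside the downstream pipeline, a job could use the slug to list the build's artifacts through Bitrise's GET /v0.1/apps/{app-slug}/builds/{build-slug}/artifacts endpoint before handing them to Danger.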

Within that internal pipeline, Danger processes the .xcresult bundles and coverage reports published by Bitrise.

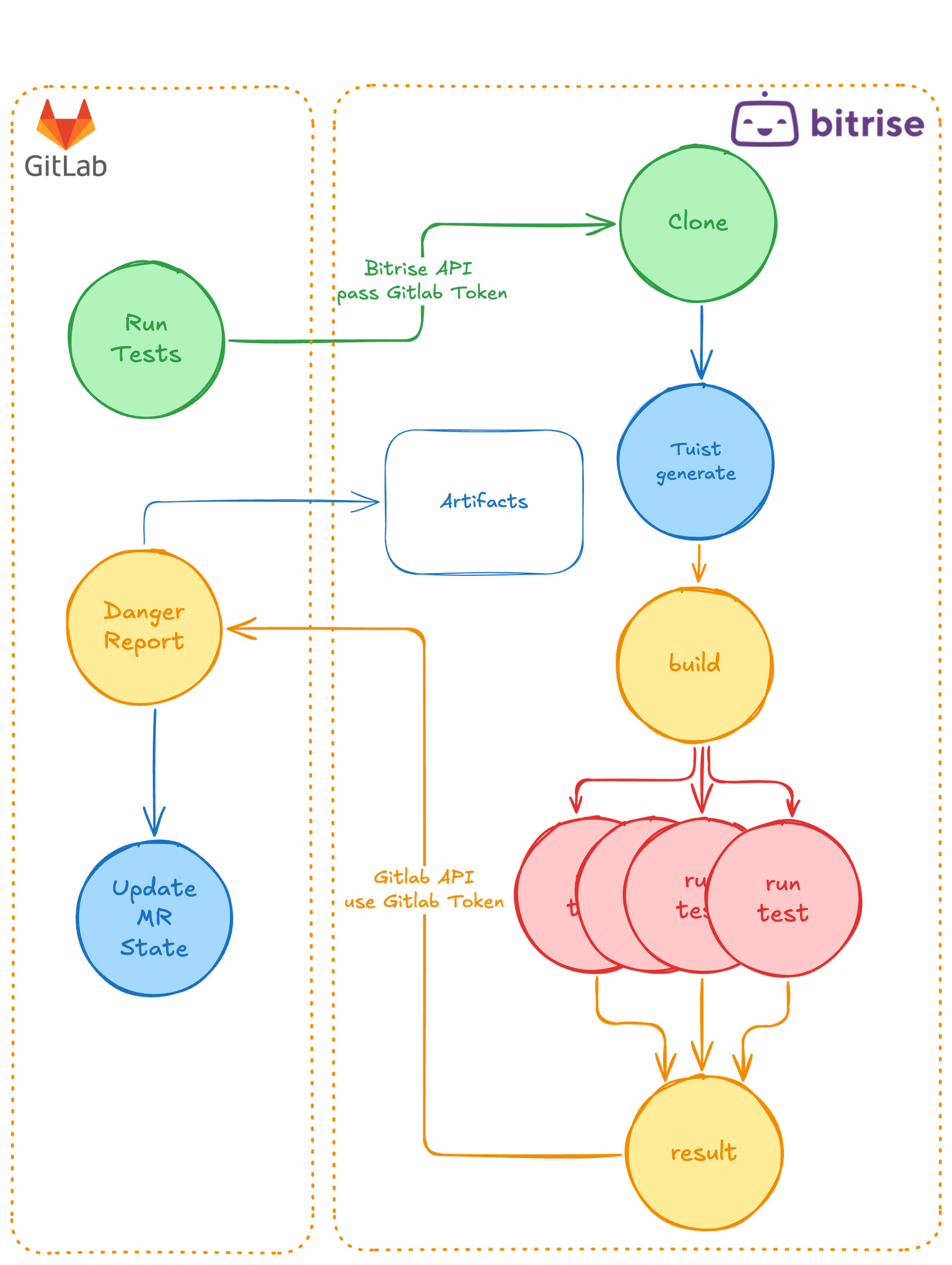

A successful pipeline looks like this:

The bird’s eye view looks like this:

Our “together but not mixed” approach proved that you do not have to choose between security and developer experience. After more than two years of running this system, the results speak for themselves:

Security achievements:

Developer experience improvements:

But the real victory is philosophical: we proved that security and convenience are not mutually exclusive. By inverting the traditional CI/CD model, we created a system that our teams trust and our developers love to use.

We are not done optimizing.

Thanks for reading!

Staff iOS Developer